The development of attention mechanisms and transformers has significantly advanced natural language processing (NLP) and made large language models possible, with critical contributions from industry leaders such as OpenAI and Meta. OpenAI’s GPT series, including the highly impactful ChatGPT, has demonstrated these models’ practical applications and versatility. Similarly, Meta’s LLaMA, and especially the open-source LLaMA 2, represents another leap forward, showcasing cutting-edge research and diverse applications in NLP.

These innovations from OpenAI and Meta not only drive technological advancement but also set new benchmarks in the industry. As a result, each new release of open-source LLMs attracts considerable attention. Enthusiasts and professionals are keen to explore these models in a controlled environment, seeking hands-on experience to understand their capabilities and potential without the burden of complex setup requirements.

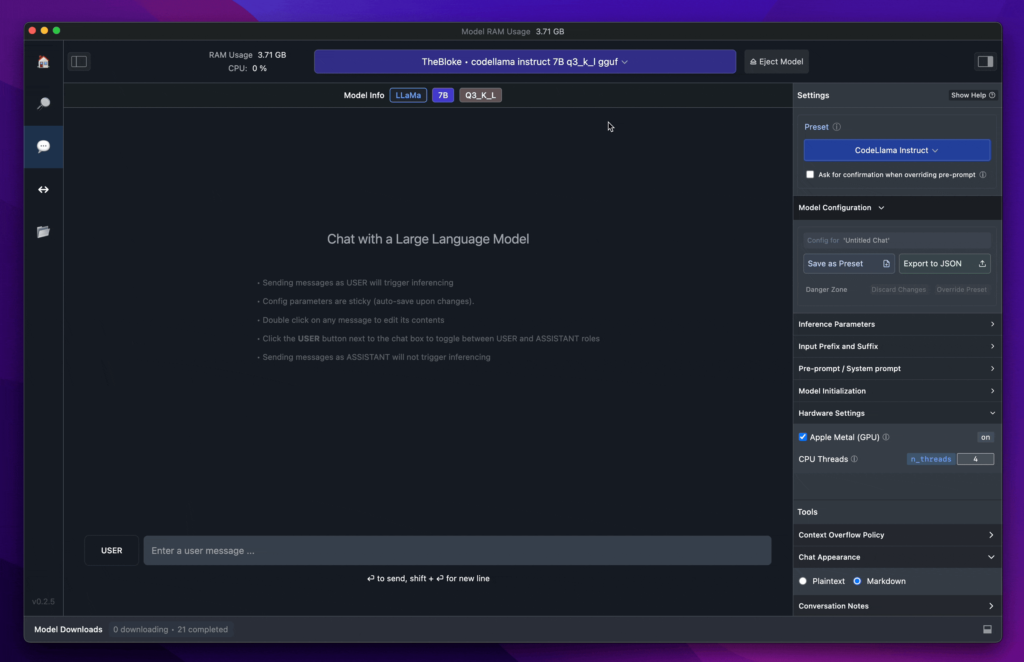

LM Studio

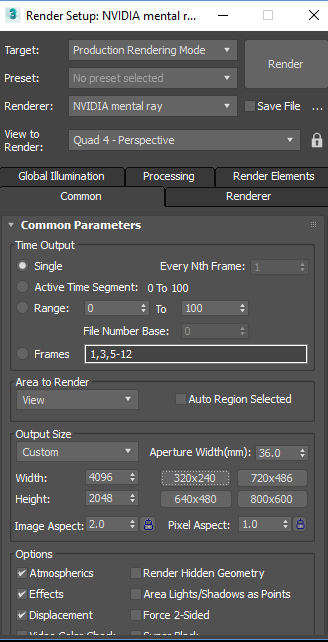

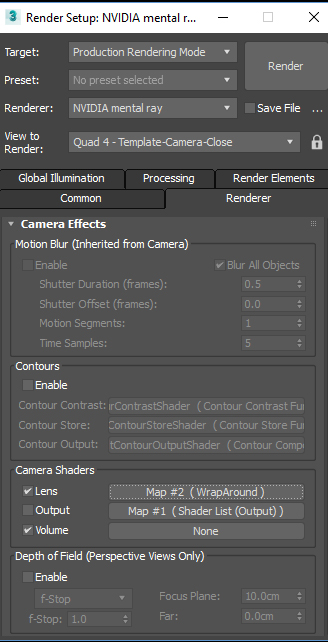

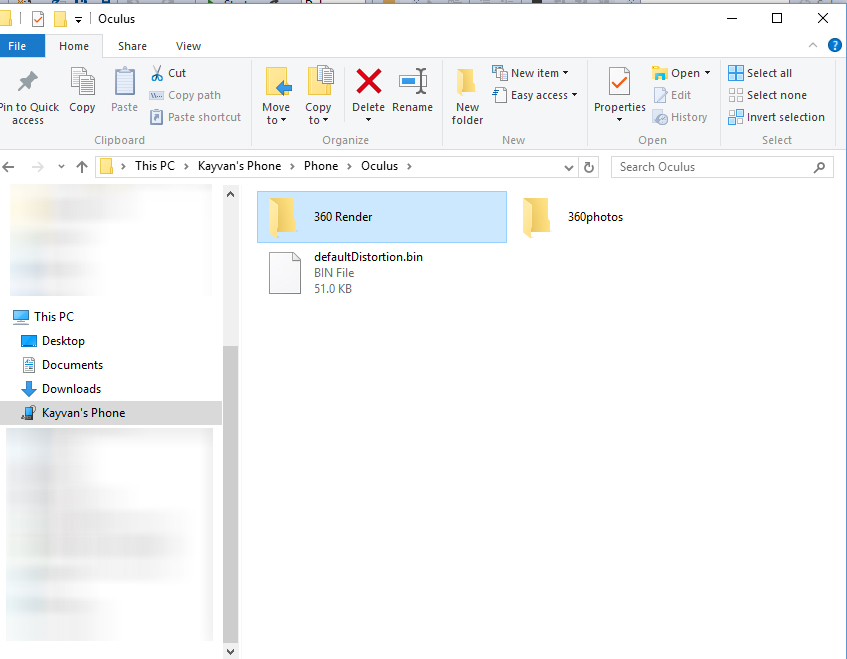

LM Studio is a desktop application for experimenting with local, open-source large language models (LLMs). It is cross-platform and lets users download and run any ggml-compatible model from Hugging Face. The application offers a user-friendly interface for model configuration and inference and uses the device’s GPU, if available, for better performance.

LM Studio is particularly recommended for students and enthusiasts eager for hands-on experience with LLMs. It is well suited to those who want to explore and experiment with various models in a practical, accessible way.